plume tracking

Papers and Code

Airflow Source Seeking on Small Quadrotors Using a Single Flow Sensor

Jan 22, 2026

As environmental disasters happen more frequently and severely, seeking the source of pollutants or harmful particulates using plume tracking becomes even more important. Plume tracking on small quadrotors would allow these systems to operate around humans and fly in more confined spaces, but can be challenging due to poor sensitivity and long response times from gas sensors that fit on small quadrotors. In this work, we present an approach to complement chemical plume tracking with airflow source-seeking behavior using a custom flow sensor that can sense both airflow magnitude and direction on small quadrotors < 100 g. We use this sensor to implement a modified version of the 'Cast and Surge' algorithm that takes advantage of flow direction sensing to find and navigate towards flow sources. A series of characterization experiments verified that the system can detect airflow while in flight and reorient the quadrotor toward the airflow. Several trials with random starting locations and orientations were used to show that our source-seeking algorithm can reliably find a flow source. This work aims to provide a foundation for future platforms that can use flow sensors in concert with other sensors to enable richer plume tracking data collection and source-seeking.
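
To make the idea of cast-and-surge with a direction-sensing flow sensor concrete, here is a minimal sketch of one control step. It is not the paper's modified algorithm: the threshold, the sweep-widening rule, the `CastState` fields, and the command tuple are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class CastState:
    """Bookkeeping for the casting (search) phase. All fields are hypothetical."""
    direction: int = 1    # +1 = cast right, -1 = cast left
    steps_left: int = 2   # steps remaining in the current sweep
    sweep_len: int = 2    # length of the current sweep; grows while searching


def cast_and_surge_step(flow_mag, flow_bearing, state, threshold=0.5):
    """Return (mode, yaw_change, forward, lateral) for one control step.

    flow_bearing is the direction the flow comes FROM, in radians,
    relative to the vehicle's heading (0 = straight ahead).
    """
    if flow_mag >= threshold:
        # Surge: turn to face the flow and move forward; reset the search.
        state.direction, state.steps_left, state.sweep_len = 1, 2, 2
        return ("surge", flow_bearing, 1.0, 0.0)
    # Cast: sweep sideways, reversing and widening when a sweep ends.
    if state.steps_left == 0:
        state.direction *= -1
        state.sweep_len += 2
        state.steps_left = state.sweep_len
    state.steps_left -= 1
    return ("cast", 0.0, 0.0, float(state.direction))
```

In this sketch the measured flow direction is used only during the surge, to pick the heading; a classic cast-and-surge without direction sensing would instead surge along a fixed or externally estimated upwind direction.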

FIRE-VLM: A Vision-Language-Driven Reinforcement Learning Framework for UAV Wildfire Tracking in a Physics-Grounded Fire Digital Twin

Jan 06, 2026

Wildfire monitoring demands autonomous systems capable of reasoning under extreme visual degradation, rapidly evolving physical dynamics, and scarce real-world training data. Existing UAV navigation approaches rely on simplified simulators and supervised perception pipelines, and lack embodied agents interacting with physically realistic fire environments. We introduce FIRE-VLM, the first end-to-end vision-language model (VLM) guided reinforcement learning (RL) framework trained entirely within a high-fidelity, physics-grounded wildfire digital twin. Built from USGS Digital Elevation Model (DEM) terrain, LANDFIRE fuel inventories, and semi-physical fire-spread solvers, this twin captures terrain-induced runs, wind-driven acceleration, smoke plume occlusion, and dynamic fuel consumption. Within this environment, a PPO agent with dual-view UAV sensing is guided by a CLIP-style VLM. Wildfire-specific semantic alignment scores, derived from a single prompt describing active fire and smoke plumes, are integrated as potential-based reward shaping signals. Our contributions are: (1) a GIS-to-simulation pipeline for constructing wildfire digital twins; (2) a VLM-guided RL agent for UAV firefront tracking; and (3) a wildfire-aware reward design that combines physical terms with VLM semantics. Across five digital-twin evaluation tasks, our VLM-guided policy reduces time-to-detection by up to 6 times, increases time-in-FOV, and is, to our knowledge, the first RL-based UAV wildfire monitoring system demonstrated in kilometer-scale, physics-grounded digital-twin fires.
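
Potential-based reward shaping adds a term of the form `gamma * phi(s') - phi(s)` to the environment reward, where `phi` is a potential over states. The sketch below assumes the potential is a cosine similarity between a prompt embedding and a frame embedding; the function names, the two-dimensional toy embeddings, and the `weight` parameter are hypothetical and are not taken from FIRE-VLM.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two equal-length embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0.0 else 0.0


def shaped_reward(env_reward, phi_s, phi_next, gamma=0.99, weight=1.0):
    """Add the potential-based shaping term gamma * phi(s') - phi(s)."""
    return env_reward + weight * (gamma * phi_next - phi_s)


# Hypothetical embeddings: one text prompt and two consecutive camera frames.
prompt = [1.0, 0.0]
frame_t = [0.0, 1.0]    # nothing matching the prompt in view
frame_t1 = [1.0, 1.0]   # partial match with the prompt
phi_t = cosine_similarity(prompt, frame_t)
phi_t1 = cosine_similarity(prompt, frame_t1)
reward = shaped_reward(0.0, phi_t, phi_t1)  # positive: alignment improved
```

Because the shaping term is a difference of potentials, it rewards moving toward better-aligned views rather than simply sitting in one.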

Real-Time Instrument Planning and Perception for Novel Measurements of Dynamic Phenomena

Sep 03, 2025

Advancements in onboard computing mean remote sensing agents can employ state-of-the-art computer vision and machine learning at the edge. These capabilities can be leveraged to unlock new rare, transient, and pinpoint measurements of dynamic science phenomena. In this paper, we present an automated workflow that synthesizes the detection of these dynamic events in look-ahead satellite imagery with autonomous trajectory planning for a follow-up high-resolution sensor to obtain pinpoint measurements. We apply this workflow to the use case of observing volcanic plumes. We analyze classification approaches including traditional machine learning algorithms and convolutional neural networks. We present several trajectory planning algorithms that track the morphological features of a plume and integrate these algorithms with the classifiers. We show through simulation an order of magnitude increase in the utility return of the high-resolution instrument compared to baselines while maintaining efficient runtimes.

Autonomous Drone for Dynamic Smoke Plume Tracking

Apr 17, 2025

This paper presents a novel autonomous drone-based smoke plume tracking system capable of navigating and tracking plumes in highly unsteady atmospheric conditions. The system integrates advanced hardware and software and a comprehensive simulation environment to ensure robust performance in controlled and real-world settings. The quadrotor, equipped with a high-resolution imaging system and an advanced onboard computing unit, performs precise maneuvers while accurately detecting and tracking dynamic smoke plumes under fluctuating conditions. Our software implements a two-phase flight operation, i.e., descending into the smoke plume upon detection and continuously monitoring the smoke movement during in-plume tracking. Leveraging Proportional-Integral-Derivative (PID) control and a Proximal Policy Optimization based Deep Reinforcement Learning (DRL) controller enables adaptation to plume dynamics. Unreal Engine simulation evaluates performance under various smoke-wind scenarios, from steady flow to complex, unsteady fluctuations, showing that while the PID controller performs adequately in simpler scenarios, the DRL-based controller excels in more challenging environments. Field tests corroborate these findings. This system opens new possibilities for drone-based monitoring in areas like wildfire management and air quality assessment. The successful integration of DRL for real-time decision-making advances autonomous drone control for dynamic environments.
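
For readers unfamiliar with the PID baseline mentioned above, a textbook discrete PID controller can be sketched as follows. The gains and the choice of error signal (for example, the offset of the plume from the image center) are assumptions for illustration; the abstract does not give the paper's controller details.

```python
class PID:
    """Textbook discrete PID controller; gains here are illustrative only."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None  # no derivative term on the first update

    def update(self, error, dt):
        """Return the control output for the current error and time step."""
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


# Hypothetical use: error is the plume's horizontal offset in the image.
pid = PID(kp=2.0, ki=0.5, kd=0.1)
first_cmd = pid.update(error=1.0, dt=0.1)
second_cmd = pid.update(error=0.5, dt=0.1)
```

A controller like this reacts only to the current error, its running sum, and its rate of change, which is consistent with the abstract's observation that PID is adequate in steadier flows.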

COSMOS: A Data-Driven Probabilistic Time Series simulator for Chemical Plumes across Spatial Scales

May 28, 2025

The development of robust odor navigation strategies for automated environmental monitoring applications requires realistic simulations of odor time series for agents moving across large spatial scales. Traditional approaches that rely on computational fluid dynamics (CFD) methods can capture the spatiotemporal dynamics of odor plumes, but are impractical for large-scale simulations due to their computational expense. On the other hand, puff-based simulations, although computationally tractable for large scales and capable of capturing the stochastic nature of plumes, fail to reproduce naturalistic odor statistics. Here, we present COSMOS (Configurable Odor Simulation Model over Scalable Spaces), a data-driven probabilistic framework that synthesizes realistic odor time series from spatial and temporal features of real datasets. COSMOS generates similar distributions of key statistical features such as whiff frequency, duration, and concentration as observed in real data, while dramatically reducing computational overhead. By reproducing critical statistical properties across a variety of flow regimes and scales, COSMOS enables the development and evaluation of agent-based navigation strategies with naturalistic odor experiences. To demonstrate its utility, we compare odor-tracking agents exposed to CFD-generated plumes versus COSMOS simulations, showing that both their odor experiences and resulting behaviors are quite similar.

Development and Application of Self-Supervised Machine Learning for Smoke Plume and Active Fire Identification from the FIREX-AQ Datasets

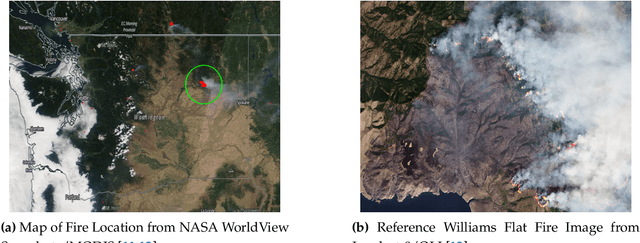

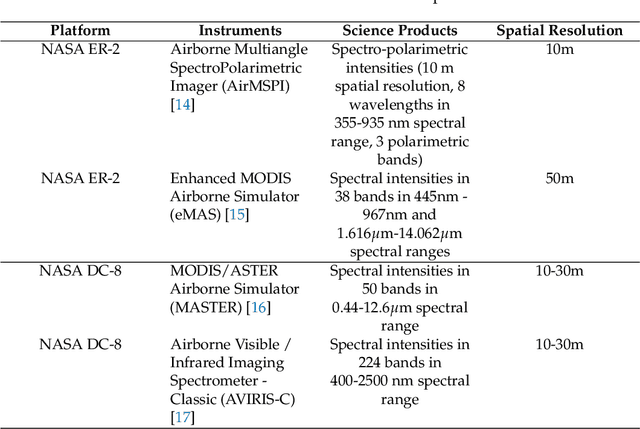

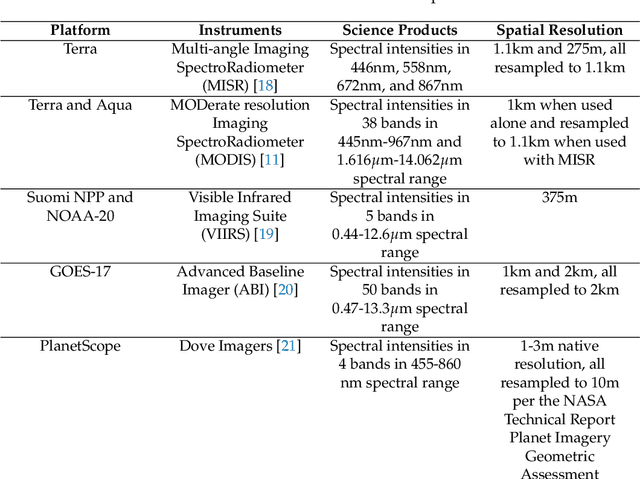

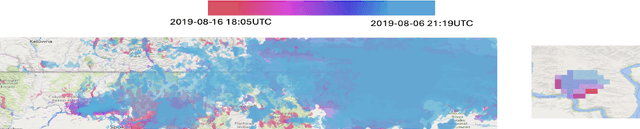

Jan 25, 2025

Fire Influence on Regional to Global Environments and Air Quality (FIREX-AQ) was a field campaign aimed at better understanding the impact of wildfires and agricultural fires on air quality and climate. The FIREX-AQ campaign took place in August 2019 and involved two aircraft and multiple coordinated satellite observations. This study applied and evaluated a self-supervised machine learning (ML) method for active fire and smoke plume identification and tracking in the satellite and sub-orbital remote sensing datasets collected during the campaign. Our unique methodology combines remote sensing observations with different spatial and spectral resolutions. The demonstrated approach successfully differentiates fire pixels and smoke plumes from background imagery, enabling the generation of a per-instrument smoke and fire mask product, as well as smoke and fire masks created from the fusion of selected data from independent instruments. This ML approach has the potential to enhance operational wildfire monitoring systems and improve decision-making in air quality management through fast smoke plume identification and tracking, and could improve climate impact studies through the fusion of data from independent instruments.

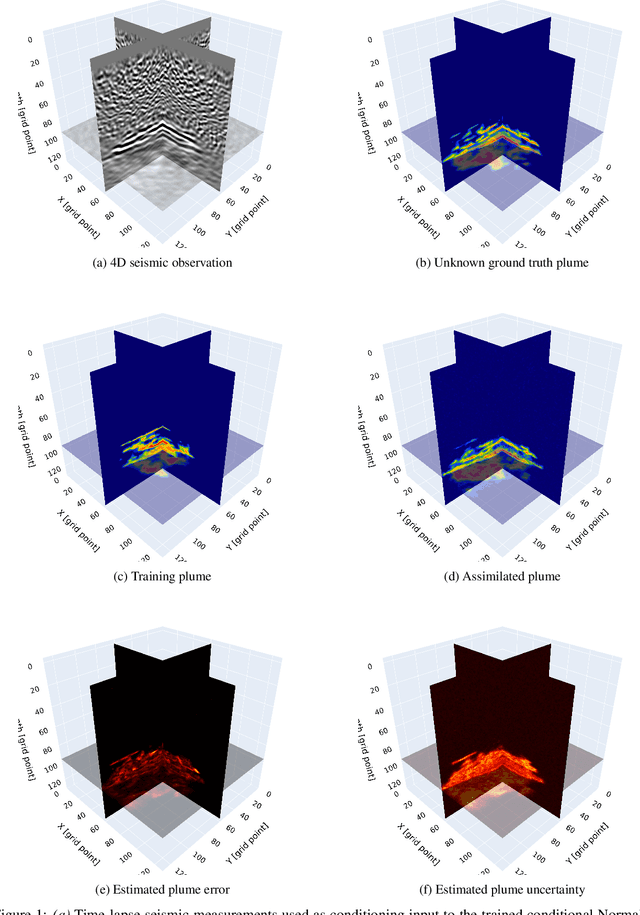

Advancing Geological Carbon Storage Monitoring With 3D Digital Shadow Technology

Feb 11, 2025

Geological Carbon Storage (GCS) is a key technology for achieving global climate goals by capturing and storing CO2 in deep geological formations. Its effectiveness and safety rely on accurate monitoring of subsurface CO2 migration using advanced time-lapse seismic imaging. A Digital Shadow framework integrates field data, including seismic and borehole measurements, to track CO2 saturation over time. Machine learning-assisted data assimilation techniques, such as generative AI and nonlinear ensemble Bayesian filtering, update a digital model of the CO2 plume while incorporating uncertainties in reservoir properties. Compared to 2D approaches, 3D monitoring enhances the spatial accuracy of GCS assessments, capturing the full extent of CO2 migration. This study extends the uncertainty-aware 2D Digital Shadow framework by incorporating 3D seismic imaging and reservoir modeling, improving decision-making and risk mitigation in CO2 storage projects.

Detection and tracking of gas plumes in LWIR hyperspectral video sequence data

Nov 01, 2024

Automated detection of chemical plumes presents a segmentation challenge. The segmentation problem for gas plumes is difficult due to the diffusive nature of the cloud. The advantage of considering hyperspectral images in the gas plume detection problem over the conventional RGB imagery is the presence of non-visual data, allowing for a richer representation of information. In this paper we present an effective method of visualizing hyperspectral video sequences containing chemical plumes and investigate the effectiveness of segmentation techniques on these post-processed videos. Our approach uses a combination of dimension reduction and histogram equalization to prepare the hyperspectral videos for segmentation. First, Principal Components Analysis (PCA) is used to reduce the dimension of the entire video sequence. This is done by projecting each pixel onto the first few Principal Components resulting in a type of spectral filter. Next, a Midway method for histogram equalization is used. This method redistributes the intensity values in order to reduce flicker between frames. This properly prepares these high-dimensional video sequences for more traditional segmentation techniques. We compare the ability of various clustering techniques to properly segment the chemical plume. These include K-means, spectral clustering, and the Ginzburg-Landau functional.
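
The flicker-reduction step can be illustrated with a small sketch of midway equalization for two frames. This is one common sorting-based reading of the technique, under the assumption that both frames are flat lists of equal length: pixels are matched by intensity rank and each matched pair receives the average of its two values. The function name is hypothetical, and the paper's exact variant for whole video sequences is not specified in the abstract.

```python
def midway_equalize(frame_a, frame_b):
    """Give two equal-length flat frames the same 'midway' histogram.

    Pixels are paired by intensity rank; each pair is set to the mean of
    its two original values, so spatial ordering within a frame is kept.
    """
    if len(frame_a) != len(frame_b):
        raise ValueError("frames must have the same number of pixels")
    n = len(frame_a)
    # Indices of each frame's pixels, from darkest to brightest.
    order_a = sorted(range(n), key=frame_a.__getitem__)
    order_b = sorted(range(n), key=frame_b.__getitem__)
    out_a = [0.0] * n
    out_b = [0.0] * n
    for ia, ib in zip(order_a, order_b):
        mid = (frame_a[ia] + frame_b[ib]) / 2.0
        out_a[ia] = mid
        out_b[ib] = mid
    return out_a, out_b


# Two tiny hypothetical frames with very different brightness.
eq_a, eq_b = midway_equalize([10, 30, 20], [60, 40, 80])
```

After the call, both frames contain the same set of intensity values, while each frame keeps its own ordering of dark and bright pixels.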

Fire Dynamic Vision: Image Segmentation and Tracking for Multi-Scale Fire and Plume Behavior

Aug 16, 2024

The increasing frequency and severity of wildfires highlight the need for accurate fire and plume spread models. We introduce an approach that effectively isolates and tracks fire and plume behavior across various spatial and temporal scales and image types, identifying physical phenomena in the system and providing insights useful for developing and validating models. Our method combines image segmentation and graph theory to delineate fire fronts and plume boundaries. We demonstrate that the method effectively distinguishes fires and plumes from visually similar objects. Results demonstrate the successful isolation and tracking of fire and plume dynamics across various image sources, ranging from synoptic-scale ($10^4$-$10^5$ m) satellite images to sub-microscale ($10^0$-$10^1$ m) images captured close to the fire environment. Furthermore, the methodology leverages image inpainting and spatio-temporal dataset generation for use in statistical and machine learning models.

Fish-inspired tracking of underwater turbulent plumes

Mar 15, 2024

Autonomous ocean-exploring vehicles have begun to take advantage of onboard sensor measurements of water properties such as salinity and temperature to locate oceanic features in real time. Such targeted sampling strategies enable more rapid study of ocean environments by actively steering towards areas of high scientific value. Inspired by the ability of aquatic animals to navigate via flow sensing, this work investigates hydrodynamic cues for accomplishing targeted sampling using a palm-sized robotic swimmer. As a proof-of-concept analogy for tracking hydrothermal vent plumes in the ocean, the robot is tasked with locating the center of turbulent jet flows in a 13,000-liter water tank using data from onboard pressure sensors. To learn a navigation strategy, we first implemented Reinforcement Learning (RL) on a simulated version of the robot navigating in proximity to turbulent jets. After training, the RL algorithm discovered an effective strategy for locating the jets by following transverse velocity gradients sensed by pressure sensors located on opposite sides of the robot. When implemented on the physical robot, this gradient following strategy enabled the robot to successfully locate the turbulent plumes at more than twice the rate of random searching. Additionally, we found that navigation performance improved as the distance between the pressure sensors increased, which can inform the design of distributed flow sensors in ocean robots. Our results demonstrate the effectiveness and limits of flow-based navigation for autonomously locating hydrodynamic features of interest.
